DeepMind Researcher Affirms Scaling Law, Citing Compute as Core AI Driver

A DeepMind researcher's year-end review for 2025 emphasizes that computational power remains the primary driver of artificial intelligence advancements, asserting that the "Scaling Law is not dead." The analysis, published by Google DeepMind researcher Zhengdong Wang, reflects on the rapid evolution of AI and the foundational role of compute in achieving increasingly sophisticated capabilities.

The report emerges as various figures in the AI community offer differing perspectives on the field's trajectory. OpenAI CEO Sam Altman, in a mid-2025 blog post, asserted that Artificial General Intelligence (AGI) development was well underway, with systems capable of original insights expected by 2026. He maintained that the Scaling Law still had significant potential, anticipating that the cost of intelligence would fall toward the cost of electricity as data center production became automated.

Conversely, Yann LeCun, Meta's former chief AI scientist, reportedly said before leaving to found a new company that large language models (LLMs) were a "dead end for AGI" because they lack a world model. NVIDIA CEO Jensen Huang, in a late 2025 address, shifted focus from "compute worship" to "AI factories," noting that the bottleneck for AI had become electricity rather than imagination, and that future scaling would involve significant leaps in inference efficiency.

Compute as the Enduring Principle

Wang's analysis, based on his experience at DeepMind, reinforces the idea that compute is the central force behind AI progress. He reviews the field's changes since 2015, highlighting the exponential growth in computational power used for AI model training. The capabilities unlocked by this growth, he notes, have consistently outpaced expectations.

According to Wang, the number of mathematical operations consumed by models during training has reached astronomical figures within DeepMind. He cites empirical research by Kaplan et al. and Hoffmann et al., which indicates a power-law relationship in which performance scales in proportion to compute raised to the power of 0.35. Under that relationship, a tenfold increase in compute yields roughly a 2.2-fold performance gain, since 10^0.35 is approximately 2.24.
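
The arithmetic behind this power law can be checked directly. The following is a minimal sketch, assuming performance scales as compute raised to a fixed exponent; the function name `performance_gain` is hypothetical, and the exponent 0.35 is simply the figure quoted above, not one verified independently here.

```python
def performance_gain(compute_multiplier: float, exponent: float = 0.35) -> float:
    """Relative performance gain under an assumed power law.

    gain = compute_multiplier ** exponent, where 0.35 is the exponent
    quoted in the article (an assumption, not a verified constant).
    """
    if compute_multiplier <= 0:
        raise ValueError("compute_multiplier must be positive")
    return compute_multiplier ** exponent


if __name__ == "__main__":
    # Print the implied gain for a few compute multipliers.
    for multiplier in (10, 100, 1000):
        gain = performance_gain(multiplier)
        print(f"{multiplier:>5}x compute -> {gain:.2f}x performance")
```

Under this assumption, a tenfold increase in compute corresponds to a gain of roughly 2.2x, and a thousandfold increase to roughly 11x, which illustrates the diminishing returns built into any power law with an exponent below 1.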

Emergent Capabilities and Scaling Dimensions

The researcher points to "emergent capabilities" observed in DeepMind's experiments, such as logical reasoning and complex instruction following, which appear as compute increases. This phenomenon suggests that computational power is not merely a resource but a catalyst for generating intelligence.

Wang identifies a shift from "pre-training scaling" to a broader approach encompassing four dimensions:

Pre-training Scaling: Building foundational cognition through multimodal data.

Post-training Scaling: Utilizing reinforcement learning for alignment and preference optimization.

Inference-time Scaling: Enabling models to "think longer" before responding.

Context Scaling: Enhancing end-to-end task capabilities through extended memory.
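
One simple way to see why spending more compute at inference time can help is majority voting over several independent attempts. The sketch below is an illustration only, not the method Wang describes: it assumes a hypothetical solver that answers a yes/no question correctly with a fixed probability `p_correct`, independently on each attempt, and computes exactly how often the majority of `n_samples` attempts is right.

```python
from math import comb


def majority_vote_accuracy(p_correct: float, n_samples: int) -> float:
    """Probability that a strict majority of n independent attempts is correct.

    Assumes a binary question and an odd number of attempts, so ties
    cannot occur. Both the solver and its accuracy are hypothetical.
    """
    if n_samples < 1 or n_samples % 2 == 0:
        raise ValueError("n_samples must be a positive odd integer")
    if not 0.0 <= p_correct <= 1.0:
        raise ValueError("p_correct must be in [0, 1]")
    majority = n_samples // 2 + 1
    # Sum the binomial probabilities of getting at least `majority` correct.
    return sum(
        comb(n_samples, k) * p_correct**k * (1 - p_correct) ** (n_samples - k)
        for k in range(majority, n_samples + 1)
    )


if __name__ == "__main__":
    for n in (1, 3, 11, 51):
        print(f"{n:>2} attempts -> accuracy {majority_vote_accuracy(0.6, n):.3f}")
```

With a solver that is right 60% of the time, accuracy rises as more attempts are sampled, at the cost of proportionally more inference compute. Real systems that "think longer" are far more involved; this toy model only captures the basic trade-off.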

The "1000x Compute" Revelation

Wang recounts a 2021 DeepMind experiment involving embodied AI in 3D virtual environments. Initially, the team focused on algorithmic optimization for navigation and interaction. However, a decision to increase compute input a thousandfold, without algorithmic changes, led to breakthroughs. Problems previously thought to require complex human ingenuity were resolved through sheer computational scale.

This experience, Wang states, underscored Richard Sutton's "Bitter Lesson": general methods that scale with compute tend to outperform hand-crafted, problem-specific algorithmic tricks. This perspective has become ingrained at DeepMind, shifting the focus from "can this problem be solved" to "how much compute is needed to solve this problem."

Infrastructure and the 1GW Era

Wang also addresses the infrastructural demands of advanced AI, noting that internal DeepMind discussions have moved from "PFLOPS" to "GW," indicating a focus on power consumption. He describes AI as a "heavy industry" requiring significant integration of land, energy, and custom silicon.

NVIDIA's Blackwell platform, delivered in 2025, is cited as a key enabler, with the GB200 NVL72 system achieving 30 times faster inference for trillion-parameter models than its predecessor. The Blackwell Ultra's 288GB single-chip memory capacity allows large models to reside entirely in memory, crucial for long contexts and high-concurrency inference.

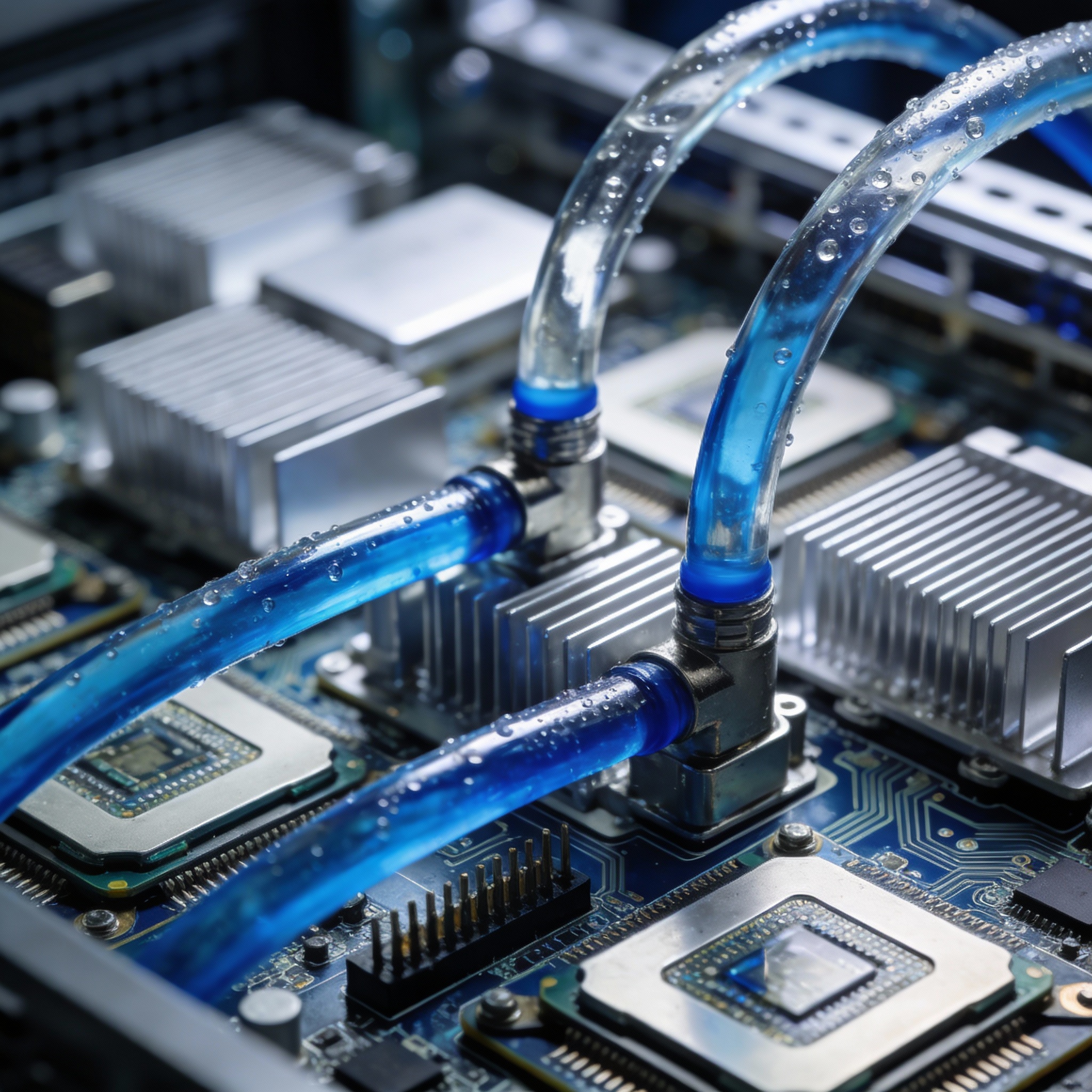

However, the physical limits of power and cooling present challenges. With single-chip power consumption nearing 1000W, DeepMind has had to transition fully to liquid cooling. Amin Vahdat, who leads AI infrastructure at Google, reportedly stated that compute capacity must double every six months to meet demand, aiming for a 1000-fold increase within four to five years. Investment in AI infrastructure, Wang notes, accounted for over 90% of U.S. GDP growth in the first half of 2025.
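
The doubling target quoted above compounds quickly. As a rough check, the sketch below computes how many doublings a given capacity multiplier requires and how long that takes at a fixed doubling interval; the function names are hypothetical, and a perfectly steady six-month doubling period is an assumption.

```python
import math


def doublings_needed(target_multiplier: float) -> float:
    """Number of doublings required to reach the target multiplier."""
    if target_multiplier <= 0:
        raise ValueError("target_multiplier must be positive")
    return math.log2(target_multiplier)


def years_to_reach(target_multiplier: float, months_per_doubling: float = 6.0) -> float:
    """Years needed to reach the target, assuming a constant doubling interval."""
    return doublings_needed(target_multiplier) * months_per_doubling / 12.0


if __name__ == "__main__":
    print(f"Doublings for 1000x: {doublings_needed(1000):.2f}")
    print(f"Years at 6-month doubling: {years_to_reach(1000):.2f}")
```

A 1000-fold increase takes just under ten doublings, or about five years at one doubling every six months, which matches the upper end of the quoted four-to-five-year range.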

Embodied AI and General Agents

DeepMind's SIMA 2 project, launched in 2025, represents a significant step in embodied AI, demonstrating a shift from "understanding" to "action" in 3D virtual worlds. SIMA 2 operates by observing pixels and interacting with virtual environments using keyboard and mouse inputs, rather than relying on in-game data interfaces. This design promotes generalizable skills transferable across digital environments and potentially to physical robots.

Combined with the Gemini foundational model, SIMA 2 exhibits "self-evolution," autonomously generating tasks, setting rewards, and acquiring new skills through trial and error without human annotation.

Wang highlights METR's time-horizon chart as a measure of progress, noting that the length of tasks AI can complete has grown from 9 minutes two years earlier to over 4 hours by the end of 2025. If current scaling trends hold, AI could by 2028 independently complete scientific research or engineering tasks that take human experts weeks.
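
The trend behind these figures can be made explicit with a short calculation. The sketch below is a back-of-the-envelope extrapolation, not METR's methodology: it assumes steady exponential growth, treats "over 4 hours" as exactly 240 minutes, and uses hypothetical function names.

```python
import math


def doubling_time_months(start_minutes: float, end_minutes: float,
                         elapsed_months: float) -> float:
    """Implied doubling time, assuming steady exponential growth."""
    doublings = math.log2(end_minutes / start_minutes)
    return elapsed_months / doublings


def extrapolate_minutes(current_minutes: float, months_ahead: float,
                        doubling_months: float) -> float:
    """Project the task length forward at a constant doubling time."""
    return current_minutes * 2 ** (months_ahead / doubling_months)


if __name__ == "__main__":
    # 9 minutes -> 240 minutes over 24 months, per the figures quoted above.
    doubling = doubling_time_months(9, 240, 24)
    print(f"Implied doubling time: {doubling:.1f} months")
    for months in (24, 36):
        hours = extrapolate_minutes(240, months, doubling) / 60
        print(f"+{months} months: about {hours:.0f} hours of task length")
```

Under these assumptions the task length doubles about every five months, giving roughly 107 hours after another 24 months and roughly 550 hours after 36 months. Both figures correspond to weeks of full-time human work, in line with the projection for 2028.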

The Dawn of AGI

Wang concludes that despite current achievements, the AI field is still in its early stages. While models can win IMO gold medals and agents can navigate 3D worlds, DeepMind's "Post-AGI" team views these as mere preludes. The team anticipates that crossing the AGI threshold will introduce new challenges for human society, such as managing self-evolving intelligent agents and re-evaluating human value in a world where intelligence costs approach zero.

The researcher asserts that the Scaling Law is not just a path to AGI but a philosophy reshaping the physical world, driving humanity into an era of unprecedented change.