Google Co-Founder Brin Regrets Retirement Amid AI Revolution

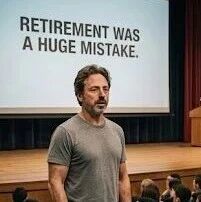

[Xinzhiyuan Report] Sergey Brin, co-founder of Google, publicly stated at Stanford University that his retirement was a significant error, according to information reviewed by toolmesh.ai. Brin, whose net worth exceeds $140 billion, expressed this sentiment during a Stanford School of Engineering centennial celebration. His remarks highlighted Google's unexpected struggle against OpenAI in the artificial intelligence sector, despite Google's pioneering work on the Transformer architecture.

Brin's statement, "Retirement was a huge mistake," reflected not boredom but regret at having missed a period of technological upheaval. He retired in 2019, inadvertently stepping away during a critical phase of computer science innovation. During his absence, Google, a dominant force in technology, found itself outmaneuvered in AI by OpenAI, a far smaller startup. Brin admitted, "We missed the opportunity because we were afraid."

The Transformer's Genesis and Google's Hesitation

The narrative traces back to 2017, when Google was at its peak. Eight researchers at Google published a paper titled "Attention Is All You Need," introducing the Transformer neural network architecture. This innovation, which enabled parallel processing of language data through "Self-Attention," was a significant leap beyond earlier Recurrent Neural Networks (RNNs). It allowed AI models to process an entire sequence at once, rather than word by word, improving both training speed and the retention of long-range context.
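To make the "entire sequence at once" idea concrete, here is a minimal sketch of self-attention in plain Python. It is a simplification, not the paper's full method: the learned query, key, and value projections and the multiple attention heads are omitted, so each token vector serves as its own query, key, and value. The function names and the toy input are illustrative assumptions.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(tokens):
    """Simplified scaled dot-product self-attention.

    Assumption: no learned projections, so every token vector acts as
    its own query, key, and value.
    """
    dim = len(tokens[0])
    scale = math.sqrt(dim)
    outputs = []
    # Each query needs only the full input, never the output of the
    # previous position, so these iterations are independent and could
    # run in parallel. An RNN, by contrast, must go step by step.
    for query in tokens:
        weights = softmax([dot(query, key) / scale for key in tokens])
        mixed = [
            sum(w * value[i] for w, value in zip(weights, tokens))
            for i in range(dim)
        ]
        outputs.append(mixed)
    return outputs

# Toy sequence of three 2-dimensional token vectors (hypothetical data).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```

Every output row is a weighted average of all input vectors, which is how each position can draw on context from anywhere in the sequence in a single step.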

Despite inventing the Transformer, Google did not immediately launch a public-facing generative AI product. Internally, the technology improved search and translation, but concerns about its impact on Google's core advertising business model prevailed. The company's search advertising relies on users clicking links, a behavior potentially undermined by an AI that provides direct answers. This "Innovator's Dilemma," as described by Harvard Business School professor Clayton Christensen, paralyzed Google's strategic response.

Furthermore, Google's emphasis on "correctness" clashed with the tendency of large language models to "hallucinate." While a startup might view this as an "interesting flaw," Google considered it a risk of "spreading misinformation." In 2021, Google unveiled LaMDA, a powerful chatbot, but management hesitated to release it publicly for fear of ethical controversies and public relations problems. Those fears were exacerbated in 2022 by an incident involving engineer Blake Lemoine, who claimed LaMDA had achieved sentience.

Talent Exodus and OpenAI's Ascent

The reluctance to deploy Transformer-based products led to a significant talent drain. The eight authors of the Transformer paper eventually left Google. Noam Shazeer, a core author, departed to found Character.AI after his recommendation to release the chatbot Meena was rejected. Aidan Gomez co-founded Cohere, and Ashish Vaswani co-founded Adept. By 2023, all of the original Transformer authors had left Google, and many went on to build AI technologies that competed with their former employer.

Meanwhile, OpenAI, whose research was steered by co-founder and chief scientist Ilya Sutskever, embraced the Transformer architecture. Sutskever advocated for "Scaling Laws," believing that increasing neural network layers, parameters, data, and computing power would lead to emergent intelligence. OpenAI systematically scaled its models, progressing from GPT-1 to GPT-3, which featured 175 billion parameters.
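The intuition behind "Scaling Laws" can be sketched with a simple power law in which loss falls smoothly as the parameter count grows. The functional form below is a common way of writing such laws, but the function name and the constants `n_c` and `alpha` are illustrative placeholders, not figures from Brin's remarks or from OpenAI.

```python
def power_law_loss(n_params, n_c=1e14, alpha=0.08):
    """Hypothetical scaling curve: loss = (n_c / n_params) ** alpha.

    n_c and alpha are made-up placeholder constants chosen only to
    show the shape of the curve.
    """
    return (n_c / n_params) ** alpha

# Parameter counts growing tenfold at each step.
sizes = [1e8, 1e9, 1e10, 1e11]
losses = [power_law_loss(n) for n in sizes]

previous = None
for n, loss in zip(sizes, losses):
    gain = "" if previous is None else f"  improvement: {previous - loss:.3f}"
    print(f"{n:.0e} parameters -> loss {loss:.3f}{gain}")
    previous = loss
```

Under this assumed curve, every tenfold increase in parameters lowers the loss by the same ratio, so the absolute improvement shrinks at each step even as the cost of training grows.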

OpenAI's product strategy focused on rapid deployment. In November 2022, it launched ChatGPT, a "half-finished product" with a simple chat interface. This accessibility quickly garnered millions of users, demonstrating the public's appetite for generative AI, even with its imperfections.

Microsoft's Strategic Move and Google's "Code Red"

Microsoft CEO Satya Nadella recognized OpenAI's potential early on, investing a reported $13 billion in total and integrating GPT-4 into Bing search. Nadella publicly stated his intent to challenge Google's dominance, remarking, "I want people to know that we made them dance," a reference to Google.

A month after ChatGPT's release, Google CEO Sundar Pichai issued a "Code Red," signaling an existential crisis. All non-essential projects were paused, and resources were redirected to AI. Pichai sought assistance from co-founders Larry Page and Sergey Brin.

Brin's Return and Google's Counterattack

Brin returned to Google not as a manager but as a hands-on engineer. Because his permissions had expired during his absence, he had to request access to the Gemini codebase (then LaMDA/Bard). Internal reports indicated that he frequently contributed code changes and was directly involved in critical training runs. He pushed for a more intense work schedule, including "60-hour work weeks" and weekend hackathons. Brin reflected on this period, stating, "When you personally tune parameters and see the model getting smarter, the dopamine release is unparalleled. I realized then that I shouldn't have retired."

Under Brin's guidance, Google developed Gemini, designed from the outset as a natively multimodal AI, capable of processing text, images, videos, and audio simultaneously. This provided an advantage over models like GPT-4, which integrated image understanding later.

However, Google faced a setback in early 2024 when Gemini's image generation feature produced controversial results, including historically inaccurate depictions. Brin publicly acknowledged the error, attributing it to overcorrection by internal alignment teams attempting to prevent racist outputs.

In a strategic move, Google struck a licensing deal with Character.AI in 2024, reportedly worth $2.7 billion, primarily to bring back Noam Shazeer, one of the original Transformer authors, who then co-led the Gemini project.

Shifting Tides and Future Outlook

By late 2025, reports indicated that OpenAI CEO Sam Altman had issued his own "Code Red" memo, suggesting that Google had significantly closed the gap. Google's latest model, Gemini 3, reportedly surpassed GPT-5 on several benchmarks, including long-text reasoning and mathematics.

Google's ecosystem integration, embedding Gemini into Android phones, Google Docs, Gmail, and Chrome, provided a distribution advantage. Furthermore, Google's proprietary TPU chips, now in their seventh generation, offered a computing power moat, contrasting with OpenAI's reliance on Nvidia GPUs.

The current AI landscape suggests that "Scaling Laws" may be reaching a plateau, with diminishing returns from simply adding computing power and data. Competition is shifting from raw scale toward more nuanced capabilities.

Brin concluded his Stanford speech by emphasizing the excitement of the current era in computer science, stating that not being part of it would be his "biggest regret." The ongoing competition between AI giants continues to drive innovation, providing increasingly powerful tools for users.