Seko AI Video Tool Integrates Multi-Episode Creation, Mainstream Models

Seko, an AI video tool, has introduced updates including multi-episode creation support and integration with external image and video models. The platform aims to streamline the AI video production workflow from scriptwriting to editing.

The tool's developers state that Seko functions as a creative agent, connecting various stages of video production. This approach is intended to provide an end-to-end solution for users, reducing the need to switch between different tools during the creative process.

Key Updates and Features

The platform now supports multi-episode creation, keeping character appearance and scene settings consistent across episodes. This addresses a common difficulty in serialized content, where characters and settings tend to drift from one segment to the next.

The tool has also integrated mainstream image and video models, such as Kling and Vidu, alongside its proprietary models. This gives users a broader selection of generative AI capabilities directly within the Seko environment.

Furthermore, Seko emphasizes a one-stop user experience, aiming to simplify the workflow from content generation to final editing. This design is intended to enable creators to focus on narrative development rather than tool-specific processes.

Workflow and Operation

Seko's workflow begins with story inspiration. Users can input initial ideas, which the platform expands into a script. Alternatively, detailed scripts can be uploaded. The tool also facilitates the creation of subjects and characters, supporting both local uploads and AI generation to maintain consistency across the narrative.

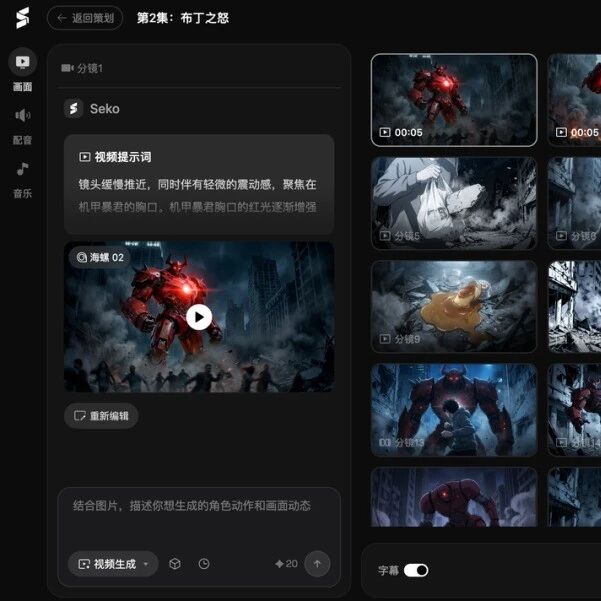

After defining subjects, users enter a story idea and can enable the multi-episode option for serialized stories. Seko then generates an outline for the first episode, including a story synopsis, art style, subject list, and storyboard script. The outline can be revised through a dialogue box. Subsequent episodes are created through the same process, which preserves narrative continuity.

For video creation, users select an image model and aspect ratio, then generate storyboard images. The platform offers options for image modification, voiceover, and background music. Minor image adjustments can be made using a "Reference Modification" feature, while significant changes can be implemented via a "Re-edit" function that allows direct modification of prompts.

Voiceover functionality includes voice selection and emotional adjustments. Users can also call up previously defined subjects when modifying images, which keeps characters consistent. Once images are finalized, they can be converted into video clips. This step includes end-frame prompts and a choice of video model and clip duration. For episodes with many shots, a "One-click Convert to Video" option is available.

After video and voiceover generation, users can select background music provided by Seko or upload their own. The final video can be exported, along with all storyboards. The storyboard view offers a clear interface for managing shots, facilitating the addition, reordering, and deletion of scenes.

Industry Context

Seko's approach reflects a common observation about the AI video generation market: rather than a single dominant model emerging, different models are specializing in different niches. Seko's strategy is to refine its own models, integrate leading external ones, and build an application layer on top to support the creative process.

This integration aims to provide a cohesive workflow that allows creators to focus on storytelling rather than managing disparate tools. The platform's developers suggest that this direction could shape future AI video creation: models continue to evolve, tools become more comprehensive, and creators describe their ideas while the system executes them.

SenseTime's self-developed image generation models, Seko Image and Seko IDX, were made available for free use for one week, concluding on December 17.